Rather than going to the NOG III Workshop, I think it is more fun to give a talk for the Capita Selecta course for 2nd year students on “Monstrous Moonshine”. If I manage to explain at least something to them, I think I am in good shape for next year’s Baby Geometry (first year) course. Besides, afterwards I may decide to give some details of Borcherds’ solution next year in my 3rd year Geometry course… (but this may just be a little bit over-optimistic).

Anyway, this is what I plan to do in my

lecture : explain both sides of the McKay-observation

that

196 884 = 196 883 + 1

that is, I\’ll give

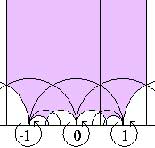

the action of the modular group on the upper-half plane and prove that

its fundamental domain is just C using the modular j-function (left hand

side) and sketch the importance of the Monster group and its

representation theory (right hand side). Then I\’ll mention Ogg\’s

observation that the only subgroups Gamma(0,p)+ of SL(2,Z)

for which the fundamental domain has genus zero are the prime divisors

p of teh order of the Monster and I\’ll come to moonshine

conjecture of Conway and Norton (for those students who did hear my talk

on Antwerp sprouts, yes both Conway and Simon Norton (via his

SNORT-go) did appear there too…) and if time allows it, I\’ll sketch

the main idea of the proof. Fortunately, Richard Borcherds has written

some excellent expository papers I can use (see his papers-page and I also discovered a beautiful

moonshine-page by Helena Verrill which will make my job a lot

easier.
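For students who want to check the left-hand side of 196 884 = 196 883 + 1 for themselves, here is a minimal sketch that computes the first few q-expansion coefficients of the j-function. It assumes the standard formula j = E4^3 / Delta, with E4 = 1 + 240 Σ σ3(n) q^n and Delta = q Π (1 - q^n)^24. The function names (`sigma3`, `mul`, `j_coefficients`) are my own choices for illustration, and the code is written for readability rather than speed.

```python
def sigma3(n):
    """Sum of the cubes of the positive divisors of n."""
    return sum(d ** 3 for d in range(1, n + 1) if n % d == 0)


def mul(a, b, N):
    """Multiply two power series (lists of coefficients), truncated to N terms."""
    c = [0] * N
    for i, ai in enumerate(a):
        if ai == 0:
            continue
        for k, bk in enumerate(b):
            if i + k >= N:
                break
            c[i + k] += ai * bk
    return c


def j_coefficients(N):
    """Return the first N coefficients of j, starting with that of q^-1.

    Uses j = E4^3 / Delta (an assumption stated in the text above).
    """
    # Eisenstein series E4 = 1 + 240 * sum sigma3(n) q^n
    e4 = [1] + [240 * sigma3(n) for n in range(1, N)]
    e4_cubed = mul(mul(e4, e4, N), e4, N)

    # Delta / q = prod_{n >= 1} (1 - q^n)^24, truncated to N terms
    prod = [1] + [0] * (N - 1)
    for n in range(1, N):
        factor = [0] * N
        factor[0], factor[n] = 1, -1
        for _ in range(24):
            prod = mul(prod, factor, N)

    # Power series division; prod[0] == 1, so everything stays an integer.
    # The quotient is q * j, so index 0 is the coefficient of q^-1 in j.
    quot = [0] * N
    for k in range(N):
        quot[k] = e4_cubed[k] - sum(quot[i] * prod[k - i] for i in range(k))
    return quot


coeffs = j_coefficients(4)
print(coeffs)                    # coefficients of q^-1, q^0, q^1, q^2
print(coeffs[2] == 196883 + 1)   # the McKay observation
```

The right-hand side, 196 883, is the dimension of the smallest non-trivial irreducible representation of the Monster; that number is simply typed in here, since computing it is a different story altogether.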

Btw. yesterday’s Monster was taken from her other monster story…

Writing a survey paper is a highly underestimated task. I once
